Over the school holidays, DSTA (Defence Science & Technology Agency, Singapore), a statutory board of the Singapore Government, ran BrainHack 2021 from 10 May to 25 June 2021. Part of this event was a Capture-The-Flag (CTF) tournament called Cyber Defenders Discovery Camp, or CDDC2021 for short. CDDC2021 was to run from 23 to 25 June, and it was the closest I have ever come to completing all the squares on the Bad CTF Bingo.

Background

DSTA outsourced the competition for S$96,888.00 to BSW International, who apparently got their pals at Cympire and CyberPro Global to run it. The CTF was split into two categories, Junior (JC/IP) and Senior (ITE/Poly/Uni). Platforms were split by category: the junior and senior categories ran with separate challenges on different subdomains.

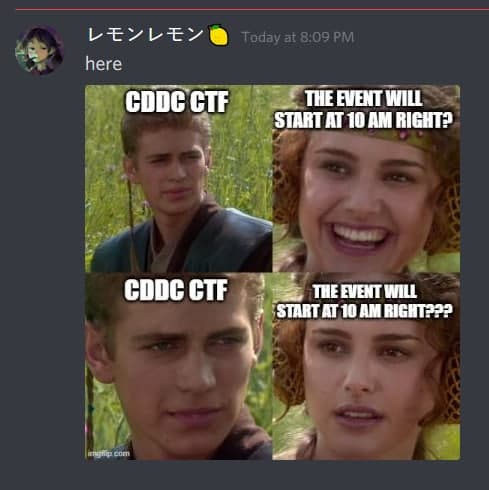

The CTF had challenges across OSINT, Reconnaissance, Linux, Web Vulnerabilities 1, Pwn, Forensics, and Reverse Engineering, which were to be released at 10am on the 23rd. Windows and Crypto categories were to be released at 10am on the 24th, and the final Web Vulnerabilities 2 category at 4pm on the 25th.

This competition was riddled with problems from start to finish. My biggest problem with it is how the winners were eventually ranked, despite the overwhelming difficulty of drawing any form of distinction between the top teams.

Dead on Arrival

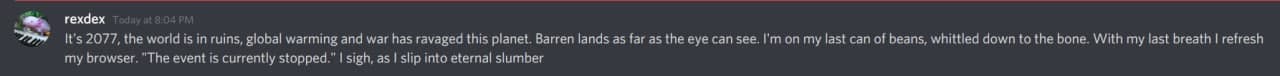

At 10am, the platform promptly died. On the senior side, no one was able to enter and there was radio silence from the competition hosts for about an hour. Eventually, we were told to check back at 11:30am, then 1pm, then 5pm, then 7pm (when we had to register all the teams again), then 8pm, and the competition was finally officially postponed to 10am on the 25th. It did come back up then, sans the SSL certificate, and crawled along for the rest of the competition. Competition timing was extended for the senior category to account for this.

Plenty of Easy Challenges

OSINT had a challenge essentially stolen from here. The Crypto challenges (guess the base, a transposition cipher, a brute-forceable XOR cipher) were a joke. The difficulty of this competition was not in line with what I would expect from one organized by DSTA.
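To give a sense of how little work a "brute-forceable XOR cipher" takes, here is a minimal sketch. It assumes a single-byte key and a known flag prefix; the actual challenge's key length and flag format are not described in this post, so the `FLAG{` prefix and the demo data are made up for illustration.

```python
from typing import Optional, Tuple


def xor_bytes(data: bytes, key: int) -> bytes:
    """XOR every byte of data with the same single-byte key."""
    return bytes(b ^ key for b in data)


def brute_force_xor(ciphertext: bytes, known_prefix: bytes) -> Optional[Tuple[int, bytes]]:
    """Try all 256 possible keys and return the first (key, plaintext)
    whose plaintext starts with the known prefix, or None if no key fits."""
    for key in range(256):
        candidate = xor_bytes(ciphertext, key)
        if candidate.startswith(known_prefix):
            return key, candidate
    return None


# Hypothetical demo data: NOT a real CDDC2021 flag or key.
secret = b"FLAG{example}"
ciphertext = xor_bytes(secret, 0x5A)

result = brute_force_xor(ciphertext, b"FLAG{")
print(result)
```

With only 256 candidate keys, the whole search finishes instantly, which is why this kind of challenge offers very little differentiation between teams.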

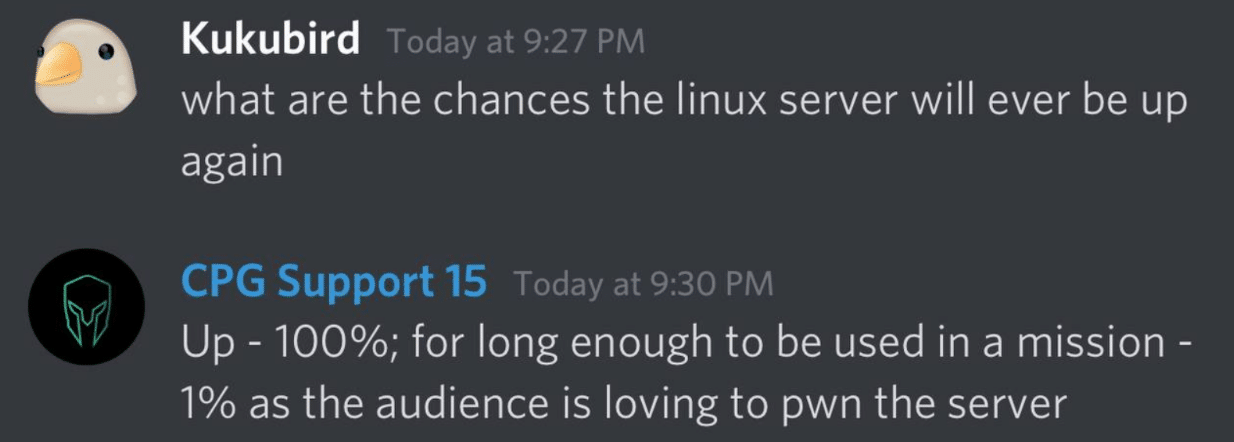

Linux

Given that most of the other challenges were quickly 100% completed, the first bottleneck for my team (Pentus) was the Linux category.

Windows

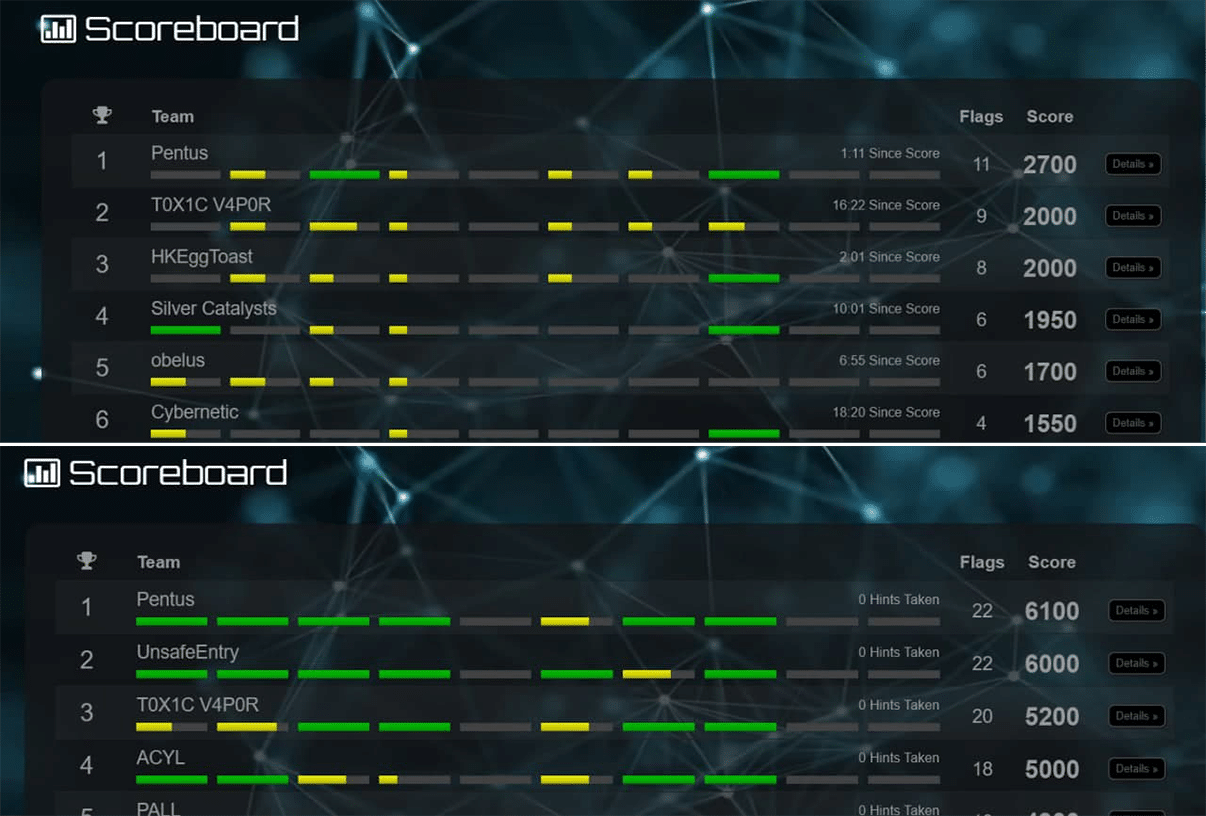

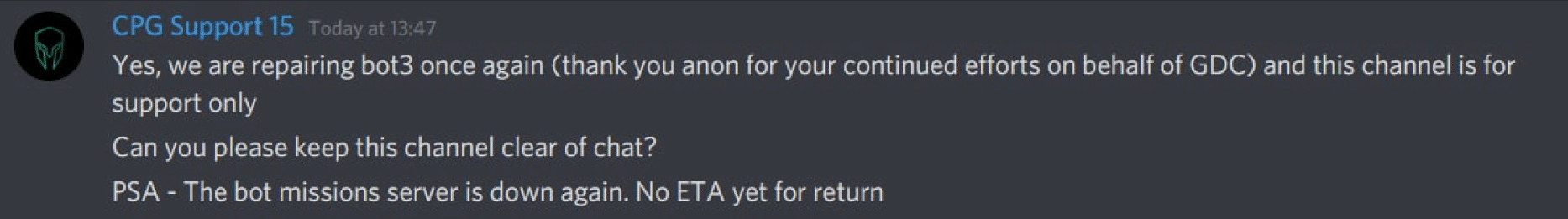

This set of challenges was released later alongside Crypto (which was easily solved). It served as the next major blocker in the competition, with the final challenge in the set going down after the first team solved it. That team then got stuck on their final Forensics challenge for a long time, but because support took over 3 hours to fix the broken Windows challenge, they still managed to get green across the board first. We came in second, as we were constantly refreshing to see if the server had come back up. Eventually, there was a 6-way tie for full score before the release of the final category.

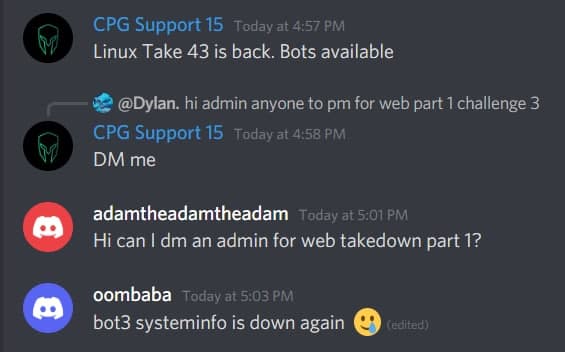

Web Vulnerabilities 2

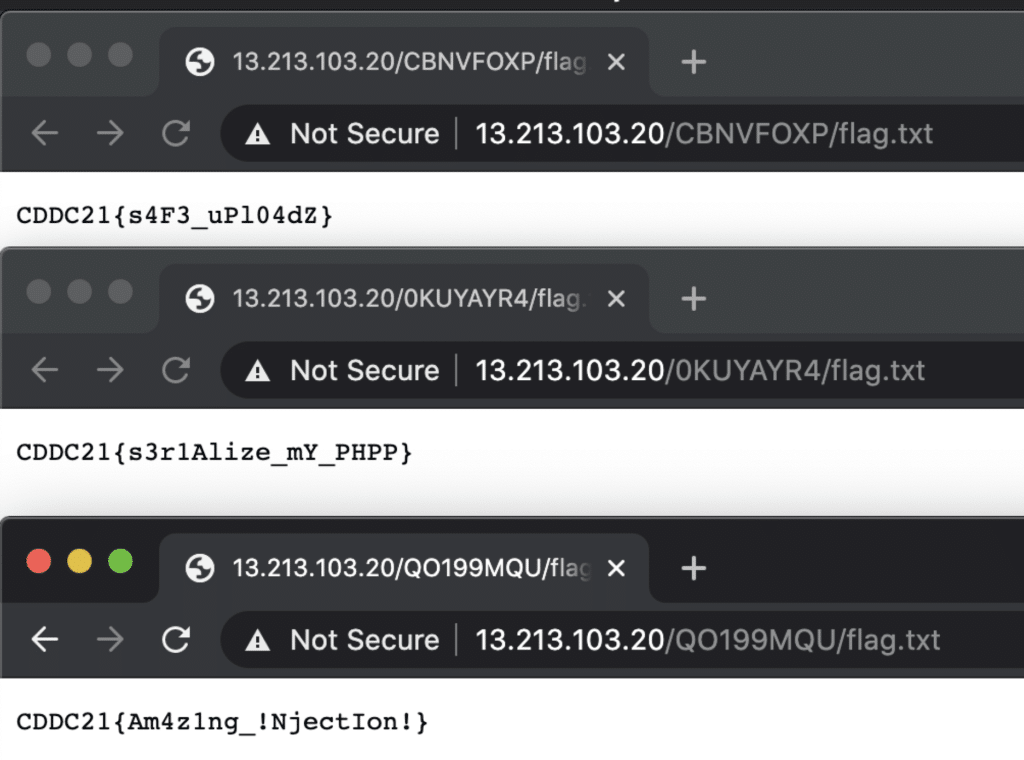

Then came the final category at 10am. My team solved the 3 challenges in 62 minutes. We realized other teams were solving them way faster than us, with captures only seconds apart. Later, we found that all three challenges were conveniently hosted on the same web server, and that the flag for each one was located at ./flag.txt relative to the challenge. Having that entry in a dirb wordlist, or finding one flag and making the connection, would have completed the entire challenge set. Additionally, it was found that the challenge “Restrictions” could be used to run a web shell that reads the contents of the other two challenges, since all three are located (again) on the same server.
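To illustrate why a single wordlist entry was enough, here is a rough sketch of what a dirb-style scan does: join every word onto every base URL and keep the ones that answer with HTTP 200. The host name, challenge paths, and wordlist below are hypothetical, and the "server" is an in-memory dictionary rather than the real platform, so nothing here touches the network.

```python
from typing import Callable, Iterable, List
from urllib.parse import urljoin


def find_hits(base_urls: Iterable[str],
              wordlist: Iterable[str],
              status_of: Callable[[str], int]) -> List[str]:
    """Return every base/word URL for which status_of reports HTTP 200."""
    words = list(wordlist)
    hits = []
    for base in base_urls:
        for word in words:
            url = urljoin(base, word)  # base must end with "/" to append
            if status_of(url) == 200:
                hits.append(url)
    return hits


# Hypothetical layout: three challenges on one host (names assumed).
BASES = [
    "http://ctf.example/restrictions/",
    "http://ctf.example/tokenization/",
    "http://ctf.example/regex/",
]

# Stand-in for the web server: only flag.txt exists under each challenge.
FAKE_SITE = {base + "flag.txt": 200 for base in BASES}


def fake_status(url: str) -> int:
    """Pretend HTTP client: 200 if the URL exists in FAKE_SITE, else 404."""
    return FAKE_SITE.get(url, 404)


hits = find_hits(BASES, ["admin", "backup.zip", "flag.txt"], fake_status)
for url in hits:
    print(url)
```

Under these assumptions, one lucky wordlist entry yields all three flags in a single pass, which is consistent with captures landing within a minute or two of each other.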

Because the scoreboard was not immediately suspended and the Notifications page continued to be updated, we could see the following pop up:

Flag Capture 10:03AM 06/25/21

Pentus has captured the ‘Restrictions’ flag in Web Takedown Episode 2

Flag Capture 10:27AM 06/25/21

Pentus has captured the ‘Tokenization’ flag in Web Takedown Episode 2

Flag Capture 11:02AM 06/25/21

Pentus has captured the ‘Regex’ flag in Web Takedown Episode 2

Flag Capture 10:09AM 06/25/21

UnsafeEntry has captured the ‘Regex’ flag in Web Takedown Episode 2

Flag Capture 10:11AM 06/25/21

UnsafeEntry has captured the ‘Restrictions’ flag in Web Takedown Episode 2

Flag Capture 10:11AM 06/25/21

UnsafeEntry has captured the ‘Tokenization’ flag in Web Takedown Episode 2

(Triple capture in 2 mins, with 11 min solve time for 3 challenges)

Flag Capture 10:28AM 06/25/21

McSpicy has captured the ‘Restrictions’ flag in Web Takedown Episode 2

Flag Capture 10:33AM 06/25/21

McSpicy has captured the ‘Regex’ flag in Web Takedown Episode 2

Flag Capture 10:34AM 06/25/21

McSpicy has captured the ‘Tokenization’ flag in Web Takedown Episode 2

(Likely, a Web Shell into Restrictions which allowed them to retrieve flags for Regex and Tokenization, hence the double solve within 2 mins – note that Team McSpicy is not one of the top 6 teams)

Flag Capture 10:41AM 06/25/21

HKEggToast has captured the ‘Tokenization’ flag in Web Takedown Episode 2

Flag Capture 10:41AM 06/25/21

HKEggToast has captured the ‘Regex’ flag in Web Takedown Episode 2

(The scoreboard/notifications stop being updated at 10:41AM, but we assume the next entry is HKEggToast capturing ‘Restrictions’ in the next few seconds)
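The timings above can be tallied with a short script. This is only a sketch over the notification entries quoted in this post; the 10:00AM release time is taken from the "at 10am" mentioned earlier, and the field names are my own.

```python
from datetime import datetime

# (team, challenge, timestamp) copied from the notifications quoted above.
CAPTURES = [
    ("Pentus", "Restrictions", "10:03AM"),
    ("Pentus", "Tokenization", "10:27AM"),
    ("Pentus", "Regex", "11:02AM"),
    ("UnsafeEntry", "Regex", "10:09AM"),
    ("UnsafeEntry", "Restrictions", "10:11AM"),
    ("UnsafeEntry", "Tokenization", "10:11AM"),
    ("McSpicy", "Restrictions", "10:28AM"),
    ("McSpicy", "Regex", "10:33AM"),
    ("McSpicy", "Tokenization", "10:34AM"),
    ("HKEggToast", "Tokenization", "10:41AM"),
    ("HKEggToast", "Regex", "10:41AM"),
]
RELEASE = "10:00AM"  # assumed release time of the category


def parse(stamp: str) -> datetime:
    """Parse a 12-hour clock stamp such as '10:03AM'."""
    return datetime.strptime(stamp, "%I:%M%p")


def summarize(captures, release):
    """Per team: flags seen, minutes from first to last capture,
    and minutes from category release to last capture."""
    start = parse(release)
    per_team = {}
    for team, _challenge, stamp in captures:
        per_team.setdefault(team, []).append(parse(stamp))
    summary = {}
    for team, times in per_team.items():
        first, last = min(times), max(times)
        summary[team] = {
            "flags": len(times),
            "first_to_last_min": int((last - first).total_seconds() // 60),
            "release_to_last_min": int((last - start).total_seconds() // 60),
        }
    return summary


summary = summarize(CAPTURES, RELEASE)
for team, stats in summary.items():
    print(team, stats)
```

HKEggToast shows only two flags here because the notifications stopped updating at 10:41AM.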

PALL and ACYL evidently managed to solve the set (or exploit the same issues as the teams above) in the next 20 minutes, before we (Pentus) solved it at 11:02AM. This is the order reflected in the so-called winners’ list, with the final rankings:

- UnsafeEntry (10:11AM)

- HKEggToast (10:41AM)

- PALL (10:42-11:00AM)

- ACYL (10:42-11:00AM)

- Pentus (11:02AM)

- T0X1C V4P0R (>11:02AM)

The fact that the above rankings follow the solve timings of the problematic Web 2 directly contradicts the official statement given at scoring announcement: “In deciding the winners of this year’s competition, we analyzed the scorings and the timing of the flag submissions closely. We concluded that the winners had clearly differentiated themselves throughout the competition.”

As I see it, no changes were made despite the massive amount of backlash in the Discord server and feedback from participants. Instead, participants were kept in the dark, further increasing stress and anxiety from an already fraught competition. We were left wondering if there would be another tiebreaker (the junior category had to go through a tiebreaker earlier because of similar challenge issues, see this screenshot), and the radio silence from the organizing staff was deafening.

Problems in Ranking

The point I want to make is that the Web 2 category should not have been used to decide the final rankings. It was a poorly conducted challenge set that was solvable, legitimately, extremely quickly. There was no clear differentiator in the set, which the top 6 teams solved in just over an hour. The only purpose it served was as a tie-breaker, which ended up being a fastest-fingers-first scenario. That would have worked, were the challenges not easily broken in unintended ways. With not one but two major exploits plaguing Web 2, this was no way to “differentiate” competitors and rank them in numerical order.

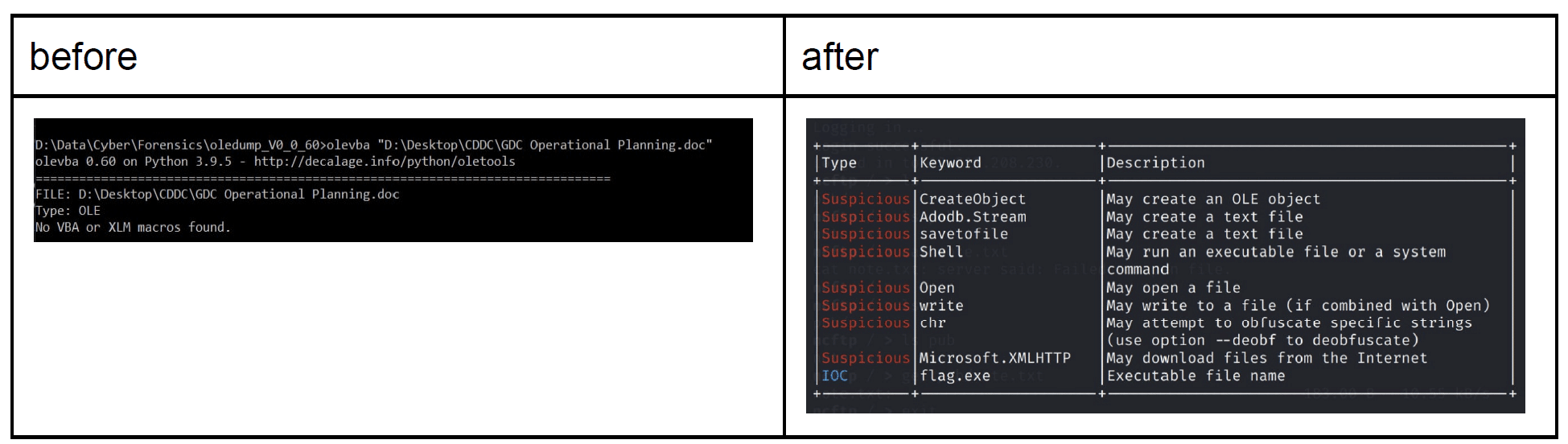

But the problem doesn’t end there – voiding Web 2 poses another problem. For the top 6 teams, the bottleneck directly before Web 2 was Windows. Around 9pm on the 25th, I was the first to raise a complaint to support that the “Old Memories” challenge was not working: participants were not able to access the box. Only one team (UnsafeEntry) had completed the challenge. During this time, UnsafeEntry were stuck on the Forensics challenge, which they later solved. While they held first place because of this bottleneck, the other 5 top teams had to wait for the challenge to be fixed. Our team diligently monitored it and was the first to solve it at 12:13AM (it was, by the way, a ridiculously easy challenge for 400 points), more than 3 hours after we raised the issue. Other teams solved it minutes later, only because I had personally mentioned in the Discord chat that the challenge was fixed, front-running the support channel.

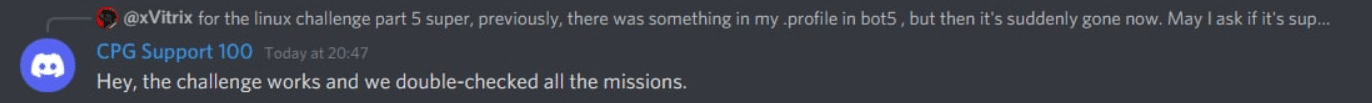

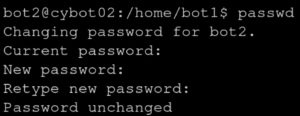

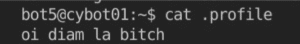

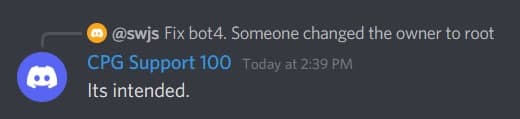

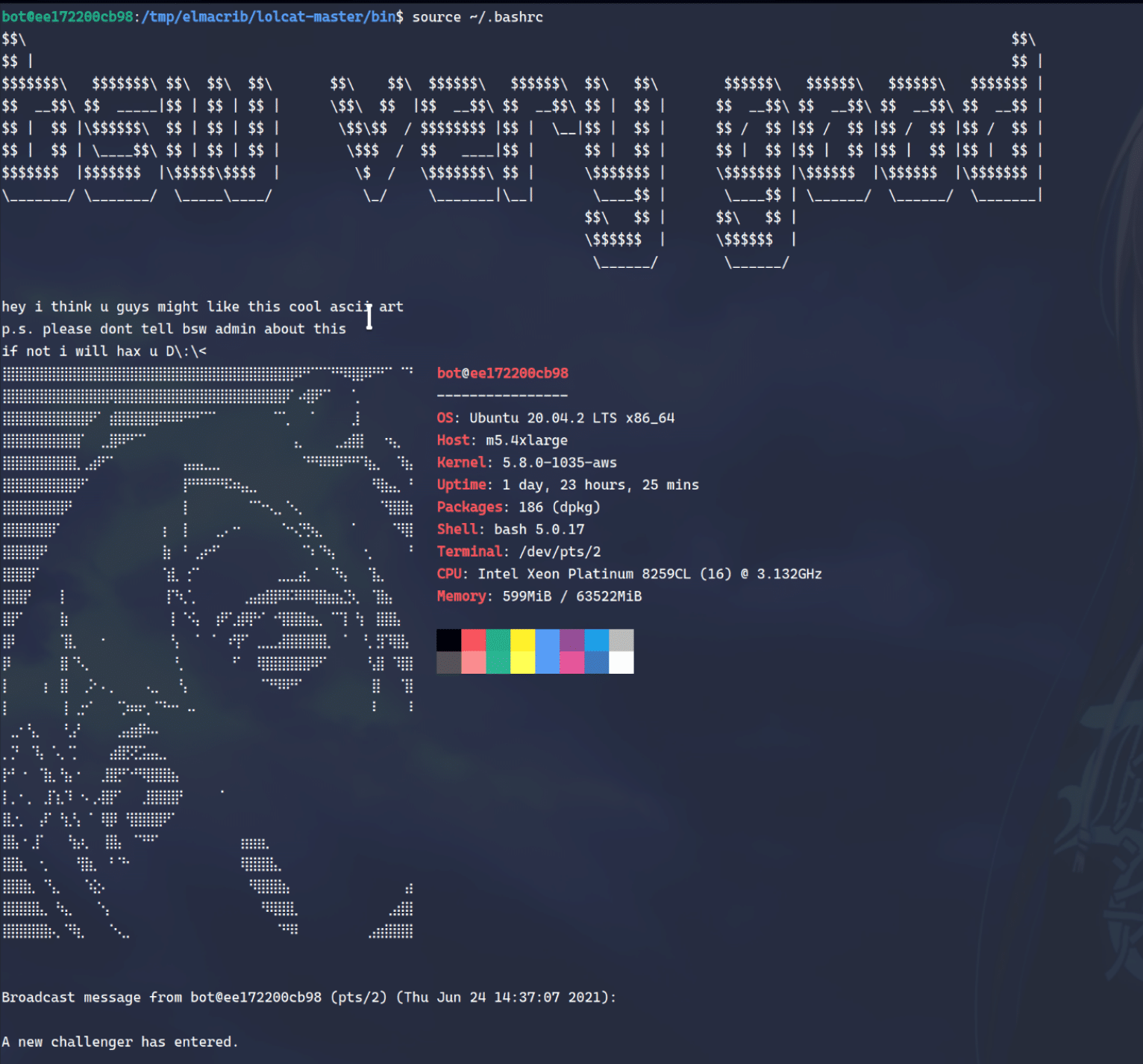

Because of this, the placement is also ambiguous. Should UnsafeEntry be the rightful first place, seeing how we were unable to complete a challenge while they were stuck on Forensics? And how about the other 4 teams who were working on being the fastest-fingers-first? What if we go even further back, to when my team was hard stuck on Linux’s “bot4”? My team member asked support repeatedly if the challenge was broken, and kept getting the reply that it was “working as intended”. It was later found that the challenge had been tampered with.

Because of the above issues, it is clear there is no plausible way to call a leaderboard in the traditional manner. It is impossible to deduce who had “clearly differentiated themselves throughout the competition”. It is unreasonable to expect competitors to showcase their talents in a competition where they cannot tell whether a challenge is working as intended. Being stuck on a challenge for an extended period because it was not working is unfair. Having no support, constantly being ghosted or ignored, and having slow (and inaccurate!) repairs are just some of the big issues that contributed to this.

Therefore, DSTA should have:

- Considered the top 6 teams as tied, with no separate ranking – it is not just the prize in dispute, it’s the position too

- Split the prizes accordingly

- Investigated any possible breach of contract in the outsourcing of the competition to BSW International / CyberPro Global / Cympire, and repurposed funds to compensate winners and participants

Proceeding the way they have has sent a message that luck and deceit – not fairness – are the key to succeeding in meritocratic Singapore. But perhaps that, ultimately, is the truth of the Singapore fabric.

A Plethora of Other Issues

Where to begin.

Rampant Censorship

Throughout the competition, the extremely badly organized Discord server was filled with complaints and vitriol against BSW International. This was a Discord server that looked nothing like that of any other CTF I’ve been on. There was no support ticket system, no clear distinction of roles, channels were being made on the fly, and the announcements were everywhere except the actual announcements channel. One competitor likened the server to “a Reddit meetup”.

Posts about BSW International were being censored and deleted, and some competitors had the presence of mind to set up another Discord server where we could talk among ourselves. This turned out to be pretty important, because once the competition ended the organizers nuked every channel except announcements in a bid to destroy evidence and silence criticism. The platform was also nuked immediately after. A true Singapore experience!

Stolen CTF Platform

The CTF platform used was essentially a rebranded version of the open-source Root The Box. This wholesale copy included the error messages, word for word. The only change was that all instances of “Root the Box” were changed to “Cympire”, with no credit given. Hope they got their money’s worth!

Bogo Sort Leaderboard

The leaderboard was magically sorted. The second level of sorting after score was essentially a bogo sort, because it was not sorted by time, by challenges done, or even lexicographically.
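For contrast, a deterministic tie-break takes a few lines. The sketch below follows a common CTF convention (higher score first, then earlier last scoring submission, then name); it is not taken from Root The Box or from the platform used here, and the team names and numbers are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Team:
    name: str
    score: int
    last_solve_minute: int  # minutes from start to last scoring submission


def rank(teams: List[Team]) -> List[Team]:
    """Sort by score (descending), then last solve time (ascending),
    then name, so that ties always resolve the same way."""
    return sorted(teams, key=lambda t: (-t.score, t.last_solve_minute, t.name))


# Hypothetical teams for illustration only.
teams = [
    Team("alpha", 1000, 62),
    Team("bravo", 1000, 11),
    Team("charlie", 900, 5),
]

ranking = [t.name for t in rank(teams)]
print(ranking)
```

Any stable, documented key like this lets participants predict where a tie will land, which the actual leaderboard did not.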

Annoying Music

Every page of the CTF platform loaded a really annoying music track set to autoplay. It sounds trivial, until you’re busy trying to access/refresh multiple pages and have this ridiculous drone of music in the background.

Pwn Task

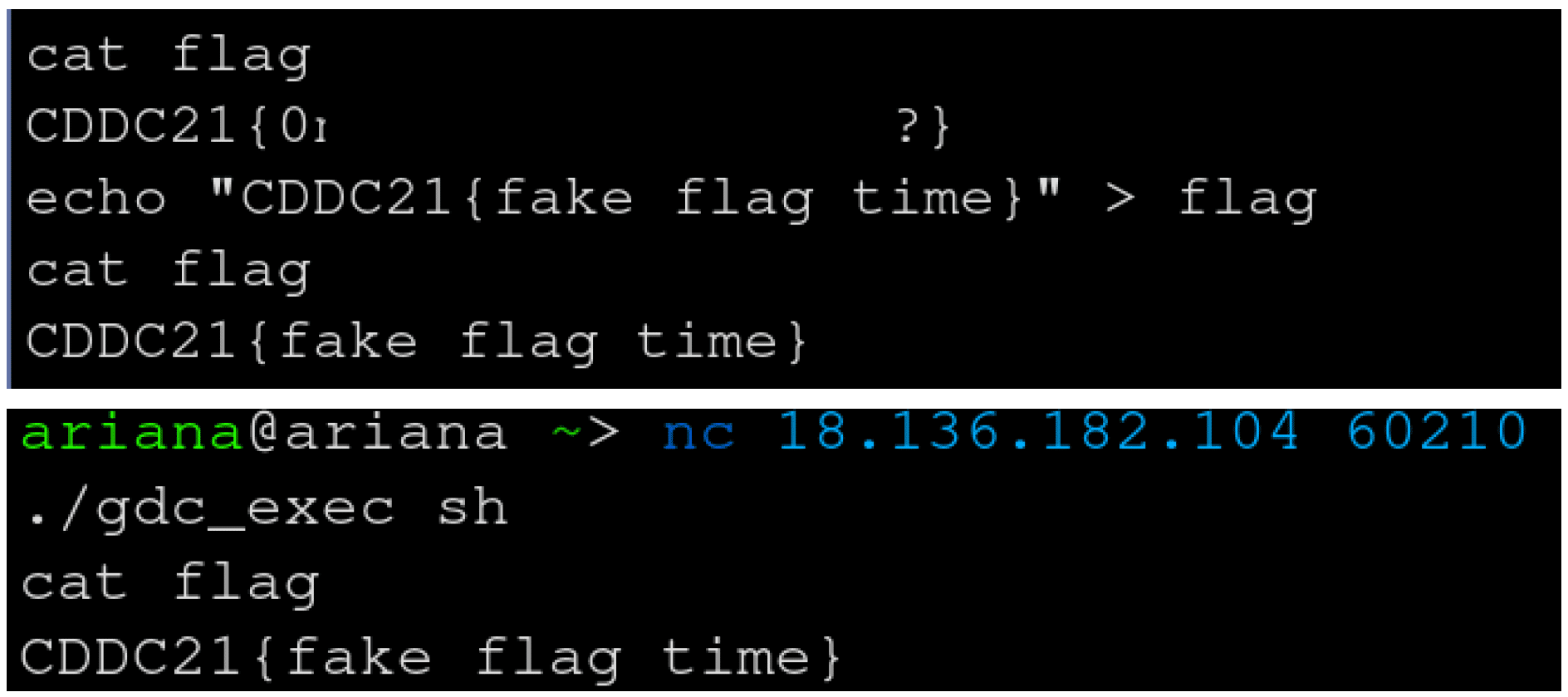

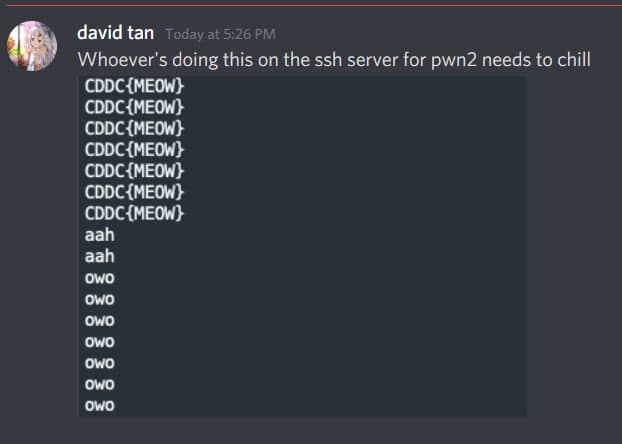

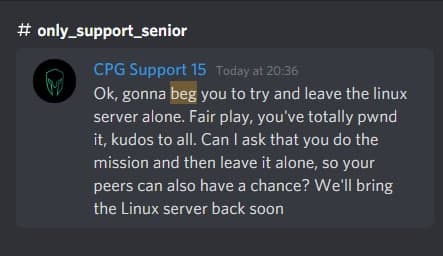

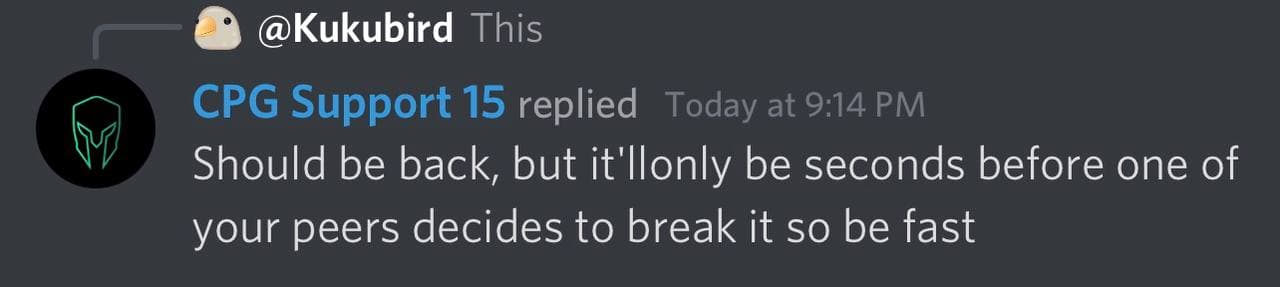

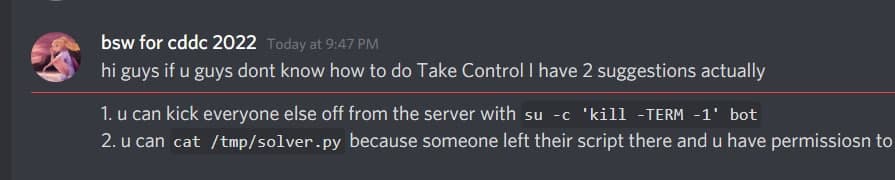

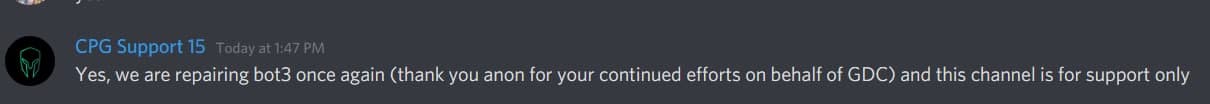

One of the Pwn tasks was apparently exploitable to gain root access on the box. Needless to say, some people had fun with that knowledge and made the challenge very hard to access for other competitors.

Broken Forensics Challenge

The junior category had a very interesting Forensics challenge in the form of a Word document in which you were supposed to find the flag. Unfortunately, there was nothing in the Word document, which competitors spent hours poring over, because the organizers had forgotten to attach the macro. They updated the file hours later… without telling anyone (see Discord conversation). As a side note, every file in this entire competition was named “file”.

“Clearly Differentiated Themselves Throughout the Competition”

What, did they think we wouldn’t have screenshots?

Other Highlights

Credits

Thanks to ariana and company for many of the screenshots.
